Five techniques conversion optimisation experts use to get the most value from their CRO efforts

Conversion rate optimisation (CRO) can be frustrating when you have set up a CRO programme and are working hard on it, but are not getting the results you expected. This article details five techniques that will help get your CRO efforts back on track. We have used this approach to deliver double-digit, and sometimes triple-digit, sales increases. Apply this advice to your CRO programme and transform your business.

5 Tips to improve CRO


Tip 1: Avoid ‘best practice’

If your ideas for what to test on your website come from an expensive downloaded report, it’s odds-on you are seeing mixed results.

What works on one site isn’t guaranteed to work on yours: the tactics that persuade visitors to buy vary enormously from site to site.

You need to get into the mindset of your customers, listen to what they are telling you and test the improvements they point you towards. After all, there’s no such thing as ‘best practice’ customers.

For example, ‘best practice’ says to steer clear of pop-ups for gaining email subscribers because they are bound to annoy visitors and hurt sales. In our split tests, however, we have seen little evidence of this. It’s always worth testing: your visitors will be different.


Tip 2: Analytics data: use sparingly

The difference between a successful CRO programme and one producing so-so results is what you do with your web analytics data.

Your analytics data simply needs to answer these two questions:

- What percentage of your visitors are dropping off your website without converting?

- Where are they dropping off?

Only web analytics data can tell you where your website’s pain points are and where the opportunities lie. Don’t get distracted by the mass of other reports you could download, pivot and explore to your heart’s content.

Use your analytics data to decide where to focus your efforts and which questions you need answered.
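Both questions can be answered from step-by-step visitor counts for your conversion funnel. Here is a minimal Python sketch, assuming you have exported those counts from your analytics tool; the step names and figures below are made up for illustration.

```python
from typing import List, Tuple

# Illustrative numbers only: replace with visitor counts for each step of
# your own conversion funnel, exported from your analytics tool.
FUNNEL = [
    ("Landing page", 10000),
    ("Product page", 4000),
    ("Basket", 800),
    ("Checkout", 400),
    ("Order confirmation", 200),
]


def overall_drop_off(steps: List[Tuple[str, int]]) -> float:
    """Percentage of visitors who entered the funnel but did not convert."""
    entered, converted = steps[0][1], steps[-1][1]
    return 100.0 * (entered - converted) / entered


def step_drop_offs(steps: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """Percentage of visitors lost between each pair of consecutive steps."""
    drops = []
    for (name, visitors), (next_name, next_visitors) in zip(steps, steps[1:]):
        lost = 100.0 * (visitors - next_visitors) / visitors if visitors else 0.0
        drops.append((f"{name} -> {next_name}", lost))
    return drops


print(f"Not converting: {overall_drop_off(FUNNEL):.1f}%")
for transition, lost in step_drop_offs(FUNNEL):
    print(f"{transition}: {lost:.1f}% drop off")

# The transition losing the largest share of visitors is where to focus first.
worst = max(step_drop_offs(FUNNEL), key=lambda pair: pair[1])
print(f"Biggest leak: {worst[0]}")
```

The first figure answers “what percentage drop off?”; the per-step breakdown answers “where?”. It deliberately stops there: explaining *why* visitors leave needs a different kind of data.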


Tip 3: Your customers’ voice: a hidden goldmine

Voice-of-customer data answers the critical question that is easy to forget to ask: ‘Why are our visitors dropping out?’

The answer to this question lies beyond traditional website analytics.

You will already have access to key insights from your customers. All you need to do is tap into them. Customer support emails, on-site search and the knowledge of your customer service staff are all examples of where the answers can be found.

Other powerful approaches for gathering voice-of-customer data are on-site surveys and usability testing.

- On-site surveys. Collect responses in free-text fields and let your users tell you their problems in their own words. This gives you better insight into their problems, frustrations and objections.

- Usability testing. To get the most from your usability testing, you need to test with the right people: qualified visitors. These are visitors who are interested in your product or service and who may or may not be persuaded to buy from you. Ethnio is a great tool for identifying and recruiting qualified usability testers directly from your website.


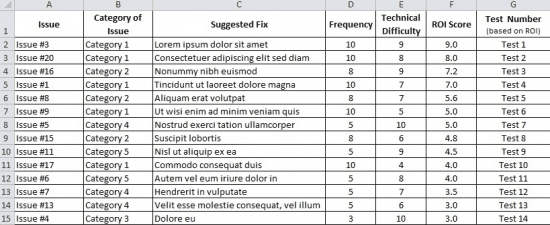

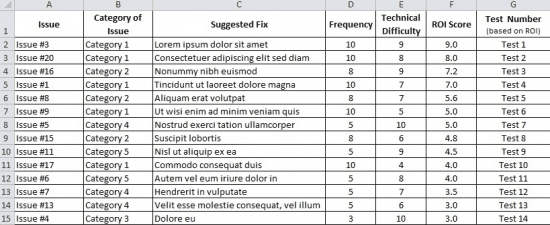

Tip 4: Prioritise your roadmap of split tests

Prioritising a long list of issues might feel daunting, but it is far easier than you might think.

- Categorise your issues. Go through the issues and group similar responses together (e.g. value proposition, unclear pricing, missing functionality).

- Rate frequency. Count how many insights fall into each category and rate each category from 1 (few issues) to 10 (many issues).

- Suggest a fix. Propose a fix for each issue (e.g. create a value proposition to communicate breadth of product, add product filters to search results page).

- Rate difficulty. Rate each fix by its technical difficulty or development cost, from 1 (difficult/expensive) to 10 (easy/cheap). The scale runs this way round so that a high score is good on both ratings.

- Determine ROI score. Multiply the frequency and difficulty scores and divide by 10, giving a score between 0.1 and 10.

- Sort by ROI. Sort your list by your ROI score, highest to lowest.

And voilà! You’re now looking at a prioritised roadmap of improvements, ready to split test.
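The scoring steps above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the `Issue` class, the linear scaling of raw category counts onto the 1 to 10 frequency scale, the unclear-pricing fix and all the numbers are hypothetical. The difficulty ratings remain judgement calls you enter by hand.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Issue:
    category: str    # e.g. "value proposition", "unclear pricing"
    fix: str         # the proposed fix to split test
    difficulty: int  # 1 = difficult/expensive ... 10 = easy/cheap


def frequency_ratings(insights: List[str]) -> Dict[str, int]:
    """Rate each category 1-10, scaled against the most frequent category.

    The linear scaling is an assumption; any consistent 1-10 mapping works.
    """
    counts = Counter(insights)
    top = max(counts.values())
    return {cat: max(1, round(10 * n / top)) for cat, n in counts.items()}


def prioritise(insights: List[str], issues: List[Issue]) -> List[Tuple[Issue, float]]:
    """Score each issue as frequency x difficulty / 10 and sort highest first."""
    freq = frequency_ratings(insights)
    # Every issue's category must appear at least once in the insights.
    scored = [(issue, freq[issue.category] * issue.difficulty / 10) for issue in issues]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Illustrative data only: each string is one categorised customer insight.
insights = (["value proposition"] * 10
            + ["unclear pricing"] * 6
            + ["missing functionality"] * 3)
issues = [
    Issue("value proposition", "create a value proposition to communicate breadth of product", 4),
    Issue("unclear pricing", "show the total price including delivery", 9),  # hypothetical fix
    Issue("missing functionality", "add product filters to search results page", 5),
]

for issue, roi in prioritise(insights, issues):
    print(f"{roi:4.1f}  {issue.category}: {issue.fix}")
```

With these made-up figures, the cheap pricing fix (score 5.4) outranks the more frequent but harder value-proposition work (4.0). Surfacing that kind of trade-off is what the ROI score is for.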


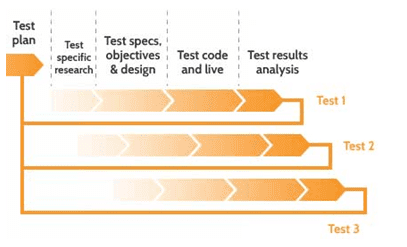

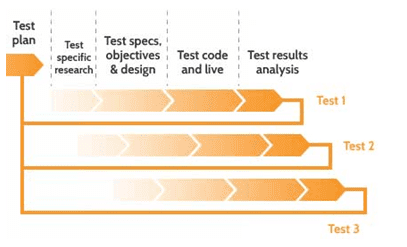

Tip 5: Give your split tests the best chance of success

Before testing:

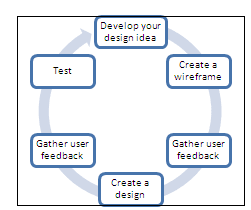

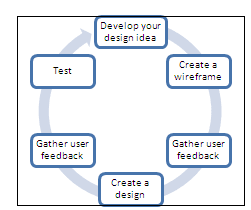

Before spending valuable time and money on website development, get user feedback on proposed split tests. Use an inexpensive wireframing tool (we use Balsamiq) to create a wireframe of the proposed change. Show it to the qualified visitors you recruited through Ethnio and ask what they think: is it an improvement? Does it make the page easier to use? Is the content more persuasive?

If not, no problem: you’ve hardly spent a penny. Go back to the drawing board, incorporate the feedback you received and go through the process again.

Once your user testers approve your wireframe, turn it into a design (a static JPEG will do) and repeat the process until you’re confident that your test ideas have a strong chance of success.

Implementing a test:

We recommend these two techniques to ensure you get the most from your test.

- Run an A/A/B/n test. Running the experiment with two identical control pages lets you verify the data as you go. If the two controls report significantly different results, something is wrong with the setup, and you can identify and resolve the problem without losing time.

- If a test loses, make sure you know why. Use a survey tool (e.g. Qualaroo) on both the control and variation pages to gather user insight and reveal why your users didn’t behave as expected.
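One way to run the A/A check described above is a two-proportion z-test between the two controls. The article doesn’t say which check its testing software applies, so the pooled z-test here is a swapped-in, standard choice, and the 5% significance level and the counts are illustrative assumptions.

```python
import math
from typing import Tuple

Counts = Tuple[int, int]  # (conversions, visitors)


def two_proportion_p_value(a: Counts, b: Counts) -> float:
    """Two-sided p-value for a difference in conversion rate (pooled z-test)."""
    conv_a, n_a = a
    conv_b, n_b = b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or all conversions) in both groups
    z = (conv_a / n_a - conv_b / n_b) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def controls_agree(control_1: Counts, control_2: Counts, alpha: float = 0.05) -> bool:
    """True if the two identical controls show no significant difference."""
    return two_proportion_p_value(control_1, control_2) >= alpha


# Illustrative counts only.
print(controls_agree((200, 10000), (210, 10000)))  # similar rates
print(controls_agree((200, 10000), (300, 10000)))  # diverging: check the setup
```

At a 5% significance level, roughly one A/A comparison in twenty will look “different” by chance alone, so a single divergent check is a prompt to investigate rather than proof of a fault.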

Interpreting test results:

Three pieces of advice:

- Don’t pay much attention to the initial results. Of course, check the results to make sure your test is not having a negative impact, but don’t read too much into early indications.

- Don’t stop the test too early. Often a test will reach a high level of statistical confidence (we use 95% or more before declaring a winner) and then drop back down again.

We have seen companies stop a split test after just three days, at below 95% confidence. A general rule of thumb is not to call a test until the winning variation has at least 100 conversions and the test has been running for at least two weeks, so that natural differences between weekday and weekend behaviour are smoothed out.

- Don’t misread the test results. This is best explained with an example:

This does NOT mean there’s a 99.9% chance you will get an uplift of 184.5%.

It means that there’s a 99.9% [A] chance your variation is better than the control page and that, during the test, the software observed an average uplift of 184.5% [B].

These results also show a +/- 0.36% deviation [C] (sometimes called the limit of error) from the average for the control and a +/- 0.62% deviation [D] for the variation. When calculating the additional revenue generated by implementing the winning variation, take these deviations into account.
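The stopping rule and the point about deviations can both be made concrete. The Python sketch below encodes the rule of thumb above and turns the limits of error into a pessimistic-to-optimistic revenue range. Pairing opposite ends of the two limits is an assumption (one simple, conservative way to account for the deviations, not necessarily the method in the full whitepaper). It also assumes the deviations are percentage points of conversion rate and that average order value is unchanged. All figures are illustrative.

```python
def ready_to_call(confidence: float, winner_conversions: int, days_running: int) -> bool:
    """Rule of thumb from the text: at least 95% confidence, at least 100
    conversions for the winning variation and at least two weeks of running."""
    return confidence >= 0.95 and winner_conversions >= 100 and days_running >= 14


def extra_revenue_range(control_rate: float, control_dev: float,
                        variant_rate: float, variant_dev: float,
                        monthly_visitors: int, average_order_value: float):
    """(pessimistic, optimistic) additional monthly revenue from the winner.

    Rates and deviations are fractions, e.g. 0.0036 for +/- 0.36 percentage
    points. Pessimistic pairs the variation's lower limit with the control's
    upper limit; optimistic does the reverse.
    """
    worst_lift = (variant_rate - variant_dev) - (control_rate + control_dev)
    best_lift = (variant_rate + variant_dev) - (control_rate - control_dev)
    revenue_per_unit_rate = monthly_visitors * average_order_value
    return worst_lift * revenue_per_unit_rate, best_lift * revenue_per_unit_rate


# Illustrative figures only.
if ready_to_call(confidence=0.97, winner_conversions=140, days_running=21):
    low, high = extra_revenue_range(0.020, 0.002, 0.028, 0.003,
                                    monthly_visitors=50_000, average_order_value=60.0)
    print(f"Projected extra revenue: {low:,.0f} to {high:,.0f} per month")
```

If the pessimistic figure comes out negative, the test has not ruled out a loss at those limits, which is worth stating when you report the projection.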

Summary

You now know more about how to start and run a successful conversion rate optimisation programme than most digital marketers.

It all boils down to five simple techniques:

- Stop relying on ‘best practice’. Applying a change simply because you read that it improved another website is an incredibly high-risk strategy.

- Use web analytics data sparingly. On its own, this data only answers the question ‘Where are my website’s conversion killers?’ Over-reliance on it will seriously impede your CRO efforts.

- Recognise the importance of voice-of-customer data. Use high-quality voice-of-customer data to generate better test ideas and turn your losing split tests into winners.

- Prioritise your issues systematically. Use frequency and difficulty ratings to identify the likely ROI of each issue and proposed fix.

- Run and read split tests like the experts. Gather user feedback before development. Set your tests up and read the results correctly.

This easy-to-follow, repeatable system has helped us generate substantial gains for our clients.

If you have comments or questions on this approach, leave a comment below.

If you found this post useful, you might be interested in downloading the full whitepaper, ‘The Advanced Guide to Conversion Rate Optimisation for E-commerce Managers and Marketing Directors’.

It has 10 extra sections, including:

- The problems that even companies with the highest-paid analysts are struggling with.

- Where your analytics tool is letting you down.

- 12 ways visitors to your website are different from other websites’ visitors.

- The real reason that button colour doesn’t matter to your visitors.

- How to use your analytics package to map your way to successful conversion.

- How, in just 5 minutes, you can identify your visitors’ biggest objections.

- Common mistakes to avoid when choosing survey questions.

- The hidden benefit of Live Chat.

- How to get laser-like focus on conversion killers from just one page.

- How to incorporate deviations when reporting projected revenue from implementing winning split test variations.

Thanks to Dan Croxen-John for sharing his advice and opinions in this post. Dan is CEO of AWA digital, a conversion rate optimisation agency with offices in London, York and Cape Town. You can connect with him on LinkedIn or follow AWA digital on Twitter.
