A simple explanation of the Robots.txt file for marketers

The robots.txt file, which implements the Robots Exclusion Protocol, is an essential part of your website. It provides instructions to the search engine robots that crawl your site. Get it wrong, and you could damage or even destroy your search engine visibility.

In this tutorial on robots.txt I'll explain the what, why and how of robots.txt for non-SEO specialists, so you can ask the right questions or have the right discussions about it with your specialists.

What is robots.txt?

Any webmaster worth their salt will know how important the robots.txt file is. Essentially a list of instructions for search engine robots (or web crawlers), it indicates any areas of your website you do not wish to be crawled (and potentially indexed) by search engines. Getting it wrong could lead to your website disappearing from the search results entirely, or indeed never appearing at all!

How does robots.txt work?

When a search engine robot crawls your site, its first port of call is your robots.txt file. This tells it which parts of your site it is allowed to crawl (visit), and therefore which pages it can read and index (store) for its search results.

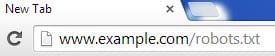

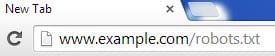

The robots.txt file of any website will live in the same place – at domain.com/robots.txt (obviously the ‘domain.com’ should be changed to your actual domain).

To check that you have a robots.txt file in place, simply navigate to your website and add /robots.txt to the end of your domain (for example, domain.com/robots.txt).

The web crawler will then take on board the instructions in your robots.txt file and omit any pages you have asked it to leave out of its crawl.
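Because the file always sits at the root of the host, you can work out where a crawler will look for it from any page URL. Below is a minimal Python sketch that uses only the standard library. The `robots_url` helper and the example URLs are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

def robots_url(page_url: str) -> str:
    """Return the robots.txt URL a crawler would check for this page's host."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any subdomain or port),
    # replace the path, and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))

print(robots_url("https://www.domain.com/blog/post?ref=home"))  # https://www.domain.com/robots.txt
print(robots_url("https://shop.domain.com/basket"))             # https://shop.domain.com/robots.txt
```

The second call shows why each subdomain needs its own file. shop.domain.com is a different host, so crawlers look for shop.domain.com/robots.txt rather than the file on www.domain.com.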

When is a robots.txt file useful?

Robots.txt files are useful in the following circumstances:

- If you want search engines to ignore any duplicate pages on your website

- If you don’t want search engines to index your internal search results pages

- If you don’t want search engines to index certain areas of your website or a whole website

- If you don’t want search engines to index certain files on your website (images, PDFs, etc.)

- If you want to tell search engines where your sitemap is located

There are several reasons why a robots.txt file would be a beneficial addition to your website, including:

Duplicate content

You may have duplicate content on your website. This is not uncommon, and can be driven by elements such as dynamic URLs, where the same content is served by various URLs depending on how the user came to it.

Though not uncommon, duplicate content is frowned upon by the search engines, and should be avoided or negated wherever possible. The robots.txt file enables you to do this by instructing web crawlers not to crawl duplicate versions.

In these situations, it also makes sense to employ canonical tagging.
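As a sketch, suppose (hypothetically) that your site serves printer-friendly duplicates of every page under /print/. The rules below ask all crawlers to skip them. Python's standard-library `urllib.robotparser` can confirm which URLs the rules block. The paths are placeholders for your own duplicate URLs.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block printer-friendly duplicates under /print/
ROBOTS_TXT = """\
User-agent: *
Disallow: /print/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://domain.com/widgets/blue-widget",
            "https://domain.com/print/widgets/blue-widget"):
    print(url, "->", "crawl" if parser.can_fetch("*", url) else "skip")
```

Bear in mind that a crawler which obeys this rule never fetches the /print/ pages, so it never sees any canonical tag placed on them. Which of the two approaches suits you depends on your setup.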

Internal search results

If you have an internal search function on your website, you may choose to omit your internal search results pages from the search engines.

This is because the search results pages on your site are unlikely to be useful to anyone other than the person who ran the search. It is better to keep your Google listings full of high-quality content that serves a purpose for anyone who finds it.
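For example, suppose your site's search results appear at URLs such as domain.com/search?q=shoes (a hypothetical path, so check what your own site uses). A single Disallow line then covers every query. The sketch below uses Python's `urllib.robotparser`. It also shows that rules are prefix matches, so /search catches /search-tips too.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical: internal search results are served from /search?q=...
parser = RobotFileParser()
parser.parse([
    "User-agent: *",
    "Disallow: /search",
])

blocked = [
    url
    for url in (
        "https://domain.com/search?q=shoes",
        "https://domain.com/search?q=boots&page=2",
        "https://domain.com/search-tips",  # also matched: rules are prefixes
        "https://domain.com/shoes",
    )
    if not parser.can_fetch("*", url)
]
print(blocked)
```

If your results live under a folder such as /search/, using that folder path in the rule avoids catching unrelated pages like /search-tips.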

Ignoring password-protected areas, files, or intranets

You can instruct web crawlers to ignore certain areas or files on your website such as employee intranets.

You may have legal reasons for doing this, perhaps data protection of employee information, or it could be that these areas just aren’t relevant to external searchers so you don’t want them appearing in the search results.

XML Sitemap Location

Another tool used by the search engine robots when crawling your site is your XML sitemap. This is a machine-readable file, written in XML, that lists the locations (URLs) of the pages on your site.

Your robots.txt file should list the location of the XML sitemap, thus making for more efficient crawling by the search engine robots.

If your XML sitemap still lists pages that your robots.txt file tells crawlers not to crawl, the robots.txt instruction takes precedence: compliant crawlers will still skip those pages.
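One way to spot such conflicts is to test every sitemap URL against your rules. Below is a minimal sketch using Python's standard-library `urllib.robotparser`. It assumes you already have the sitemap's URLs in a Python list. The `blocked_sitemap_urls` helper and all URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

def blocked_sitemap_urls(robots_lines, sitemap_urls, user_agent="*"):
    """Return the sitemap URLs that the robots.txt rules stop user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return [url for url in sitemap_urls if not parser.can_fetch(user_agent, url)]

robots_lines = [
    "User-agent: *",
    "Disallow: /terms-and-conditions",
]
sitemap_urls = [
    "https://domain.com/",
    "https://domain.com/services",
    "https://domain.com/terms-and-conditions",
]
print(blocked_sitemap_urls(robots_lines, sitemap_urls))
```

Any URL this prints is one you are sending crawlers two contradictory signals about. Either remove it from the sitemap or stop disallowing it.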

Creating a Robots.txt file

If you don’t already have a robots.txt file set up, you should do so as a matter of urgency. It’s an essential part of your website. You can ask your web developer to set this up for you or, if you have the relevant know-how yourself, follow these instructions:

- Create a new text file and save it with the name “robots.txt”. You can use Notepad on Windows PCs or TextEdit on Macs, then “Save As” a plain text file.

- Upload it to the root directory of your website – this is usually a root level folder called “htdocs” or “www” which makes it appear directly after your domain name.

- If you use subdomains, you’ll need to create a robots.txt file for each subdomain.
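If you would rather script this step, below is a minimal Python sketch that writes the file as plain UTF-8 text. The `write_robots_txt` helper and the rules are placeholders. The demo writes to a temporary folder, and you would still need to upload the result to your site's root directory as described above.

```python
import tempfile
from pathlib import Path

def write_robots_txt(folder: Path, lines: list[str]) -> Path:
    """Write robots.txt into folder as plain UTF-8 text and return its path."""
    path = folder / "robots.txt"  # the file name must be exactly robots.txt
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path

# Demo only: writes to a throwaway temporary folder.
# Pass the folder you upload to your web root instead.
with tempfile.TemporaryDirectory() as tmp:
    out = write_robots_txt(Path(tmp), ["User-agent: *", "Disallow:"])
    print(out.read_text(encoding="utf-8"), end="")
```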

Robots.txt file common instructions

Your robots.txt file will depend on the requirements you have for it.

There is therefore no set ‘ideal’ robots.txt file, but there are some common instructions that might be pertinent to you and that you could therefore include in your file. These are explained further below.

Setting the User Agent

Your robots.txt file will need to start with the ‘User-agent:’ command. This states which search engine crawler the instructions that follow apply to. It can name a specific crawler, such as User-agent: Googlebot, or all of them. Googlebot is Google’s web crawler, and this command simply means “Google: follow the instructions below”.

If you want to issue an instruction to all crawlers, simply use User-agent: *

You can find a full list of search engine crawlers if you want to issue instructions to a particular one. Use its name in place of the * symbol in the User-agent: command.
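To see how per-crawler groups work, the sketch below gives Googlebot its own rules and every other crawler a catch-all group. It then uses Python's `urllib.robotparser` to check which group applies. The paths and the second crawler name are hypothetical examples. A crawler follows only the most specific group that names it, so Googlebot here ignores the `*` rules entirely.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one group for Googlebot, one catch-all group
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /not-for-google/

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "Bingbot"):
    for path in ("/not-for-google/page", "/private/page"):
        allowed = parser.can_fetch(agent, "https://domain.com" + path)
        print(f"{agent:10} {path:22} {'allowed' if allowed else 'blocked'}")
```

Googlebot is blocked only from /not-for-google/, and every other crawler is blocked only from /private/. If you want Googlebot to respect both, repeat the shared rule inside its own group.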

Excluding Pages from being indexed

After your User-agent command, you can use Allow: and Disallow: instructions to tell web crawlers which pages or folders they may or may not crawl.

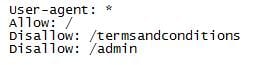

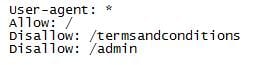

For example, to allow everything on your website to be crawled (by all web crawlers) but exclude certain pages (such as a terms and conditions page and an employee admin login page on your website), you would state in your robots.txt file:
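Here is a sketch of what that file could look like, using the hypothetical paths /terms-and-conditions and /admin-login (substitute your own). Anything not disallowed is crawlable by default, so the rest of the site needs no Allow: line. The rules are checked with Python's `urllib.robotparser`:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical paths; replace them with the real URLs on your site
ROBOTS_TXT = """\
User-agent: *
Disallow: /terms-and-conditions
Disallow: /admin-login
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for path in ("/", "/about-us", "/terms-and-conditions", "/admin-login"):
    verdict = "crawl" if parser.can_fetch("*", "https://domain.com" + path) else "skip"
    print(path, verdict)
```

If you mix Allow: and Disallow: rules that overlap, be aware of a difference in how they are resolved. Google applies the most specific (longest) matching rule, while `urllib.robotparser` uses the first matching rule in file order. A checker like this one can therefore disagree with Google on overlapping rules.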

In addition, if you don’t want certain file types on your website to be crawled, such as PDF instruction manuals or application forms, you can use Disallow: /*pdf$
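Google and other major search engines understand the wildcard * (any run of characters) and a trailing $ (end of the URL). Python's `urllib.robotparser`, however, treats them as literal characters. The sketch below hand-rolls Google-style matching to show what a rule like /*pdf$ actually catches. The `rule_matches` helper and the example paths are hypothetical, and the helper ignores Allow/Disallow precedence.

```python
import re

def rule_matches(rule: str, url_path: str) -> bool:
    """Return True if a robots.txt path rule (with * and $) matches url_path.

    '*' matches any sequence of characters; a trailing '$' means the URL
    must end there. Without '$' the rule is a prefix match.
    """
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape regex metacharacters in each literal piece, then join with '.*'
    pattern = ".*".join(re.escape(piece) for piece in rule.split("*"))
    # re.match anchors at the start (prefix match); fullmatch also anchors the end
    matcher = re.fullmatch if anchored else re.match
    return matcher(pattern, url_path) is not None

for path in ("/manuals/oven.pdf", "/forms/apply.pdf?v=2", "/blog/what-is-a-pdf"):
    print(path, rule_matches("/*pdf$", path), rule_matches("/*.pdf$", path))
```

/*pdf$ only requires the URL to end in the letters "pdf", so it also blocks a page such as /blog/what-is-a-pdf. /*.pdf$ is narrower. Neither rule matches a PDF URL that ends in a query string such as ?v=2.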

Sitemap Location

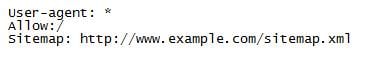

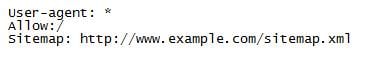

As discussed earlier, telling web crawlers where your XML sitemap is located is good SEO practice for your website. You can specify this in your robots.txt file with:

This set of commands will allow everything on your website to be crawled by all search engine crawlers.
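A file that allows everything and points crawlers at the sitemap could look like the sketch below. The sitemap URL is a placeholder and should be the full, absolute URL of your own sitemap. Python's `urllib.robotparser` (Python 3.8 or later for `site_maps()`) can read the Sitemap line back:

```python
from urllib.robotparser import RobotFileParser

# Placeholder sitemap URL; use the absolute URL of your own sitemap
ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://domain.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.site_maps())
print(parser.can_fetch("*", "https://domain.com/any/page"))
```

An empty Disallow: value means "nothing is disallowed", which is why every URL is crawlable here.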

Common mistakes in Robots.txt files

- It’s very important that you fully understand the instructions which are used in a robots.txt file. Get it wrong, and you could damage or destroy your search visibility.

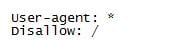

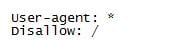

For example, if you use the following commands in your robots.txt file, you are instructing ALL web crawlers to ignore the ENTIRE domain.
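The two-line file below is the classic mistake: a single slash after Disallow: matches every path on the site. Checking it with Python's `urllib.robotparser` before publishing makes the problem obvious. The test URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# DANGER: this blocks every compliant crawler from the whole site
BLOCK_EVERYTHING = """\
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(BLOCK_EVERYTHING.splitlines())

for url in ("https://domain.com/", "https://domain.com/products/blue-widget"):
    print(url, parser.can_fetch("*", url))  # prints False for both
```

Compare this with Disallow: left empty, which allows everything. The two files differ by a single character.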

- It is also worth bearing in mind that the robots.txt file is not meant to deal with security on your website. If you have areas of your site which need to be secured, you cannot rely on your robots.txt file to keep them hidden. In fact, because anyone can read your robots.txt file, listing their locations in it would be inherently insecure. Instead, make sure every area of the website that needs to be secured is protected with a password.

- Remember, the robots.txt file is a guide and it is not guaranteed that these instructions will always be followed by all web crawlers.

If you’re still unsure, we highly recommend this comprehensive guide in Google Webmaster Tools (now Google Search Console), which covers more advanced commands and lets you test what you currently have in place.

Thanks to Ben Wood for sharing his advice and opinions in this post. Ben is the SEM Manager at Nottingham-based agency Hallam Internet. You can follow him on Twitter, or connect on LinkedIn or Google+.
